Why do we need distributed logging?

Stratos is a distributed, clustered setup in which several applications, such as ESB Servers, Application Servers, Identity Servers, Governance Servers, and Data Services Servers, are deployed together and work with each other to serve as a Platform as a Service. Each of these servers is deployed in a clustered environment, where there is more than one node for a given server and, depending on need, new nodes are spawned dynamically inside the cluster. All of these servers are fronted by an elastic load balancer, which sends each request to a selected node in a round-robin fashion.

What would you do when an error occurs in a deployment like the one above, where 13 different types of servers are running in production and each of them is clustered and load balanced across 50+ servers? It would be a nightmare for system administrators to log in to each server and grep the logs to identify the exact cause of the error. This is why distributed application deployments need to keep centralized application logs. These centralized logs should also be kept in highly scalable data storage, in an ordered manner and with easy access, so that users (administrators, developers) can get to the logs whenever something unexpected happens and pinpoint the exact cause of the issue with the least amount of filtering.

When designing a logging system like the one above, there are several things you need to consider.

- Capturing the right information inside the LogEvent – You have to make sure all the information you need in order to monitor your logs is aggregated in the LogEvent. For example, in a cloud deployment the basic log details (logger, date, log level) are not enough to pinpoint a critical issue. You also need tenant information (user/domain), host information (to identify which node is sending what), the name of the server (from which server you are getting the log), and so on. This information is critical when it comes to analyzing and monitoring logs efficiently.

- Send logs to a centralized system in a nonblocking asynchronous manner so that monitoring will not affect the performance of the applications.

- High availability and Scalability

- Security – Stratos can be deployed and hosted in public clouds, therefore it's important to make sure the logging system is highly secure.

- How to display system/application logs in an efficient way with filtering options along with log rotation.

Those are the five main aspects we were concerned with when designing the distributed logging architecture. Since Stratos supports multitenancy, we made sure that logs can be separated by tenants, services, and applications.
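The first two considerations (capturing tenant-aware fields and sending without blocking the application) can be illustrated with a small sketch. This is not the Stratos implementation: `TenantLogEvent` and `AsyncLogBuffer` are hypothetical names, and dropping events when the buffer is full is an assumed policy chosen so that a logging call never stalls the caller.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Hypothetical event type: basic log details plus tenant, server and host.
final class TenantLogEvent {
    final String tenantId;
    final String serverName;
    final String host;
    final String level;
    final String message;

    TenantLogEvent(String tenantId, String serverName, String host,
                   String level, String message) {
        this.tenantId = tenantId;
        this.serverName = serverName;
        this.host = host;
        this.level = level;
        this.message = message;
    }
}

// Hypothetical bounded buffer between application threads and a publisher thread.
final class AsyncLogBuffer {
    private final BlockingQueue<TenantLogEvent> queue;

    AsyncLogBuffer(int capacity) {
        this.queue = new ArrayBlockingQueue<>(capacity);
    }

    // Called on the application thread. Never blocks: returns false
    // (the event is dropped) when the buffer is full.
    boolean offer(TenantLogEvent event) {
        return queue.offer(event);
    }

    // Called by a background publisher: removes up to max buffered events.
    List<TenantLogEvent> drain(int max) {
        List<TenantLogEvent> batch = new ArrayList<>();
        queue.drainTo(batch, max);
        return batch;
    }
}
```

A background thread would call `drain` periodically and forward each batch to the central store, so a slow or unavailable log store costs the application at most some dropped events rather than blocked request threads.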

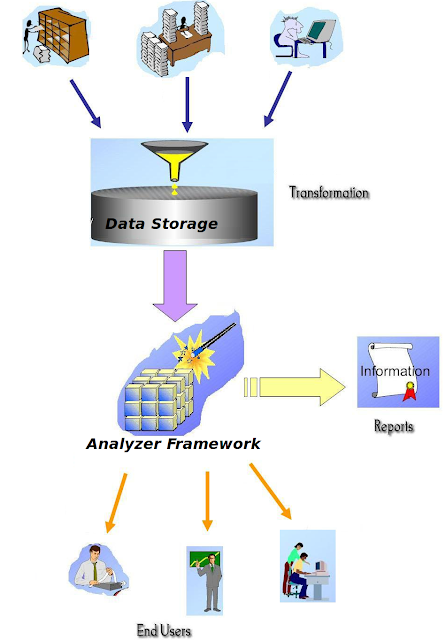

MT-Logging with WSO2 BAM 2.0

WSO2 BAM 2.0 provides a rich set of tools for aggregating, analyzing, and presenting large-scale data sets, and any monitoring scenario can be easily modeled according to the BAM architecture. We selected WSO2 BAM as the backbone of our logging architecture mainly because it provides high performance and non-intrusiveness along with high scalability and security. Since those are the crucial factors for a distributed logging system, WSO2 BAM became the ideal candidate for the MT-Logging architecture.

Publishing Logs to BAM

We implemented a Log4J appender to send LogEvents to BAM. It uses BAM data agents to get the log data across to BAM. BAM data agents send data using the Thrift protocol, which gives us high message throughput and is non-blocking and asynchronous. When publishing log events to BAM, we make sure a data stream is created per tenant, per server, per date. When the data stream is initialized, a unique column family is created per tenant, per server, per date, and the logs are stored in that column family in a predefined keyspace in the Cassandra cluster.

The data stream defines the set of information that needs to be stored for a particular LogEvent and can be modeled as a data model.

The data model used for the log event:

    {'name':'log.tenantId.applicationName.date','version':'1.0.0', 'nickName':'Logs', 'description':'Logging Event',
     'metaData':[
       {'name':'clientType','type':'STRING'}
     ],
     'payloadData':[
       {'name':'tenantID','type':'STRING'},
       {'name':'serverName','type':'STRING'},
       {'name':'appName','type':'STRING'},
       {'name':'logTime','type':'LONG'},
       {'name':'priority','type':'STRING'},
       {'name':'message','type':'STRING'},
       {'name':'logger','type':'STRING'},
       {'name':'ip','type':'STRING'},
       {'name':'instance','type':'STRING'},
       {'name':'stacktrace','type':'STRING'}
     ] }
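To make the per-tenant, per-server, per-date stream naming concrete, here is a minimal sketch of how such a name could be derived. The `LogStreamNames` class and the `yyyy_MM_dd` date format are assumptions made for illustration; only the `log.tenantId.applicationName.date` shape comes from the data model above.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;

// Hypothetical helper: builds a stream name of the form
// log.<tenantId>.<applicationName>.<date>.
final class LogStreamNames {
    // Assumed date format; the real format is not given in this post.
    private static final DateTimeFormatter DAY =
            DateTimeFormatter.ofPattern("yyyy_MM_dd");

    static String streamName(String tenantId, String applicationName, LocalDate date) {
        return "log." + tenantId + "." + applicationName + "." + DAY.format(date);
    }
}
```

Because the date is part of the name, a new stream (and therefore a new column family) starts each day, which is what lets a daily job archive one day's logs as a unit.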

We extend org.apache.log4j.PatternLayout in order to capture tenant information, server information, and node information and wrap it with the log4j LogEvent.

Log Rotation and Archiving

Once we send the log events to BAM, the logs are saved in a Cassandra cluster. WSO2 BAM provides a rich set of tools to create analytics and schedule tasks, so we use Hadoop tasks to rotate the logs daily, archive them, and store them in a secure environment. To do that, we use a Hive query which runs daily as a cron job. It reads the Cassandra data store, retrieves all the column families per tenant, per application, and archives them in gzip format.

The Hive query used to rotate logs daily:

    set logs_column_family = %s;
    set file_path= %s;
    drop table LogStats;
    set mapred.output.compress=true;
    set hive.exec.compress.output=
    set mapred.output.compression.
    set io.compression.codecs=org.
    CREATE EXTERNAL TABLE IF NOT EXISTS LogStats (key STRING,
    payload_tenantID STRING,payload_serverName STRING,
    payload_appName STRING,payload_message STRING,
    payload_stacktrace STRING,
    payload_logger STRING,
    payload_priority STRING,payload_logTime BIGINT)
    STORED BY 'org.apache.hadoop.hive.
    WITH SERDEPROPERTIES ( "cassandra.host" = %s,
    "cassandra.port" = %s,"cassandra.ks.name" = %s,
    "cassandra.ks.username" = %s,"cassandra.ks.password" = %s,
    "cassandra.columns.mapping" =
    ":key,payload_tenantID,
    payload_serverName,payload_
    payload_stacktrace,payload_
    payload_logTime" );
    INSERT OVERWRITE DIRECTORY 'file:///${hiveconf:file_path}
    select
    concat('TID[',payload_
    'Server[',payload_serverName,'
    'Application[',payload_
    'Message[',payload_message,']\
    'Stacktrace ',payload_stacktrace,'\t',
    'Logger{',payload_logger,'}\t'
    'Priority[',payload_priority,'
    concat('LogTime[',
    (from_unixtime(cast(payload_
    ORDER BY LogTime

Once the logs are archived, we send them to the HDFS file system. The archived logs can be further analysed using map-reduce jobs for long-term data analytics.
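In Stratos the compression is done by the Hive job's output settings. Purely to illustrate the gzip archiving step in isolation, here is a standalone Java sketch using the JDK's `java.util.zip` classes. `LogArchiver` is a hypothetical name and this is not the code path the Hive job uses.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.List;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

// Hypothetical helper: compresses one day's formatted log lines into a gzip archive.
final class LogArchiver {
    // Writes each line followed by a newline and returns the gzip bytes.
    static byte[] gzipLines(List<String> lines) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (GZIPOutputStream gz = new GZIPOutputStream(out)) {
            for (String line : lines) {
                gz.write((line + "\n").getBytes(StandardCharsets.UTF_8));
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out.toByteArray();
    }

    // Reads an archive back into text, e.g. when viewing archived logs.
    static String gunzip(byte[] archive) {
        try (GZIPInputStream gz =
                     new GZIPInputStream(new ByteArrayInputStream(archive))) {
            return new String(gz.readAllBytes(), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```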

Advantages of sending Logs to WSO2 BAM

- Asynchronous and non-blocking data publishing

- Receives and stores log events in a Cassandra cluster, which is a highly scalable big data repository

- Rich tool set for analytics

- Can be shared with CEP for real time Log Event analysis.

- Can provide Logging tool boxes and dashboards for system administrators using WSO2 BAM

- High performance and non-intrusiveness

- Big data analysis

- Daily log analytics: analyse the Cassandra data store

- Long-term log analytics: analyse the HDFS file system using map-reduce

Monitoring and Analyzing System Logs

- Using the Log Viewer: Both application and system logs can be displayed using the management console of a given product. Simply log in to the management console; under Monitor there are two links: (1) System Logs, which shows the system logs of the running server, and (2) Application Logs, which shows application-level logs (for services or web applications) for a selected application. This makes it easy for users to filter logs by the application they develop and to monitor logs down to the application level.

- Dashboards and Reports: System administrators can log in to WSO2 BAM and create their own dashboards and reports, so they can monitor their logs according to their key performance indicators, for example the number of fatal errors that occur in a given month for a given node.
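The fatal-errors-per-month indicator mentioned above boils down to a filter and a group-by over the stored log rows. The sketch below shows that computation in plain Java rather than as a BAM analytics script. `FatalErrorReport` and `Row` are hypothetical names, and treating `logTime` as epoch milliseconds in UTC is an assumption.

```java
import java.time.Instant;
import java.time.YearMonth;
import java.time.ZoneOffset;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

final class FatalErrorReport {
    // Hypothetical minimal view of a stored log row (fields from the data model).
    static final class Row {
        final String instance;   // node that produced the log
        final String priority;   // log level, e.g. "FATAL"
        final long logTime;      // assumed: epoch milliseconds

        Row(String instance, String priority, long logTime) {
            this.instance = instance;
            this.priority = priority;
            this.logTime = logTime;
        }
    }

    // Counts FATAL rows for one node, grouped by calendar month (UTC),
    // returned in chronological order.
    static Map<YearMonth, Long> fatalPerMonth(List<Row> rows, String instance) {
        return rows.stream()
                .filter(r -> r.instance.equals(instance))
                .filter(r -> "FATAL".equals(r.priority))
                .collect(Collectors.groupingBy(
                        r -> YearMonth.from(
                                Instant.ofEpochMilli(r.logTime).atZone(ZoneOffset.UTC)),
                        TreeMap::new,
                        Collectors.counting()));
    }
}
```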

View Logs Using the Log Viewer - Current Log

View Logs Using the Log Viewer - Archived Logs

All of these rich monitoring capabilities can be built into your deployment using the Stratos distributed logging system, so you don't have to worry about always going to the system administrators for logs whenever something goes wrong in your application :).