Prerequisites

Carbon 4.0.0 and above product

WSO2 BAM 2.0.0 and above

Apache Server installed

Introduction

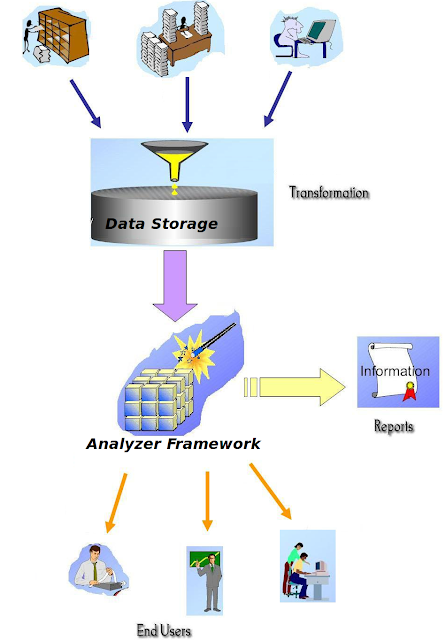

The Stratos MT-Logging architecture provides a logging framework that sends logs to BAM, which opens up a wide variety of possibilities for monitoring logs. In my previous article I explained the architecture of Distributed Logging with WSO2 BAM. This tutorial explains how to set up logging for any WSO2 product and how to analyze and monitor the logs effectively.

Setting up Hadoop Server to host archived log files

Once the logs are sent to BAM, we analyze them daily and send them to a file system. For better performance when analyzing archived logs, we send them to an HDFS file system, so that they can be analyzed with MapReduce tasks (big data, long-term data analysis). Please refer to How to Configure Hadoop to see how to configure a Hadoop cluster. Once you have a Hadoop cluster, give your HDFS information in summarizer-config.xml, and the summarizer will automatically analyze your daily logs and send them to the HDFS file system.

Summarizer Configuration for log archiving.

<cronExpression>0 0 1 ? * * *</cronExpression>

<tmpLogDirectory>/home/usr/temp/logs</tmpLogDirectory>

<hdfsConfig>hdfs://localhost:9000</hdfsConfig>

<archivedLogLocation>/stratos/archivedLogs/</archivedLogLocation>

<bamUserName>admin</bamUserName>

<bamPassword>admin</bamPassword>

cronExpression - The schedule on which the summarizer runs daily

hdfsConfig - HDFS file server information

archivedLogLocation - HDFS file path where the archived logs should be saved

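
The settings above can be sanity-checked before the summarizer is started. The following is a minimal Python sketch and not part of the WSO2 distribution: the `<config>` root element, the helper names, and the rule that the cron expression has six or seven fields (Quartz style) are assumptions made for illustration.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# Settings from this section wrapped in a hypothetical <config> root element;
# the real root element of summarizer-config.xml is not shown in this article.
FRAGMENT = """
<config>
  <cronExpression>0 0 1 ? * * *</cronExpression>
  <tmpLogDirectory>/home/usr/temp/logs</tmpLogDirectory>
  <hdfsConfig>hdfs://localhost:9000</hdfsConfig>
  <archivedLogLocation>/stratos/archivedLogs/</archivedLogLocation>
  <bamUserName>admin</bamUserName>
  <bamPassword>admin</bamPassword>
</config>
"""

REQUIRED = ("cronExpression", "tmpLogDirectory", "hdfsConfig",
            "archivedLogLocation", "bamUserName", "bamPassword")


def looks_like_hdfs_url(value):
    """Return True for values shaped like hdfs://host:port."""
    parsed = urlparse(value)
    try:
        port = parsed.port
    except ValueError:  # raised for a non-numeric or out-of-range port
        port = None
    return parsed.scheme == "hdfs" and bool(parsed.hostname) and port is not None


def check_summarizer_settings(xml_text):
    """Return a list of problems; an empty list means nothing was flagged."""
    root = ET.fromstring(xml_text)
    values = {child.tag: (child.text or "").strip() for child in root}
    problems = ["missing <%s>" % name for name in REQUIRED if not values.get(name)]

    cron = values.get("cronExpression")
    # Assumption: Quartz-style expression with 6 or 7 space-separated fields.
    if cron and len(cron.split()) not in (6, 7):
        problems.append("cronExpression should have 6 or 7 fields")

    hdfs = values.get("hdfsConfig")
    if hdfs and not looks_like_hdfs_url(hdfs):
        problems.append("hdfsConfig should look like hdfs://host:port")

    location = values.get("archivedLogLocation")
    if location and not location.startswith("/"):
        problems.append("archivedLogLocation should be an absolute path")
    return problems
```

Calling `check_summarizer_settings(FRAGMENT)` on the values used in this tutorial returns an empty list. The check only looks at the shape of the values; it does not contact HDFS or BAM.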

Setting up Log4jAppender - Server Side (AS/ESB/GREG/etc)

To publish log events to BAM, the log4j appender must be configured in each server. To do that, add LOGEVENT to the root logger and configure the LOGEVENT credentials accordingly.

Add LOGEVENT to the root logger in log4j

Go to Server_Home/repository/conf -> log4j.properties and add LOGEVENT to the log4j root logger (or replace the existing root logger line with the following line)

log4j.rootLogger=INFO, CARBON_CONSOLE, CARBON_LOGFILE, CARBON_MEMORY, CARBON_SYS_LOG,LOGEVENT

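
If many servers have to be changed, the edit can be scripted. The following is a minimal Python sketch that appends an appender name to the `log4j.rootLogger` line of a properties text; the function name `add_appender_to_root_logger` is hypothetical, and reading and writing the actual file is left out.

```python
def add_appender_to_root_logger(properties_text, appender="LOGEVENT"):
    """Return properties_text with `appender` listed on log4j.rootLogger."""
    out = []
    for line in properties_text.splitlines():
        key, sep, value = line.partition("=")
        if sep and key.strip() == "log4j.rootLogger":
            parts = [p.strip() for p in value.split(",") if p.strip()]
            # parts[0] is the log level (e.g. INFO); the rest are appender names.
            if appender not in parts[1:]:
                parts.append(appender)
            line = "log4j.rootLogger=" + ", ".join(parts)
        out.append(line)
    return "\n".join(out)
```

Running the function twice gives the same result as running it once, so it is safe to apply to a file that already lists LOGEVENT. Commented-out lines are left untouched because their key does not match exactly.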

Add data publishing URLs and credentials

Go to Server_Home/repository/conf -> log4j.properties. Set the LOGEVENT appender's LOGEVENT.url to the BAM server Thrift URL, and set LOGEVENT.userName and LOGEVENT.password.

log4j.appender.LOGEVENT=org.wso2.carbon.logging.appender.LogEventAppender

log4j.appender.LOGEVENT.url=tcp://localhost:7611

log4j.appender.LOGEVENT.layout=org.wso2.carbon.utils.logging.TenantAwarePatternLayout

log4j.appender.LOGEVENT.columnList=%T,%S,%A,%d,%c,%p,%m,%H,%I,%Stacktrace

log4j.appender.LOGEVENT.userName=admin

log4j.appender.LOGEVENT.password=admin
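
A quick way to confirm that the appender block is complete is to read the LOGEVENT settings back and check their shape. The following is a minimal Python sketch under the assumption that the URL has the form `tcp://host:port`, as in the example above; both helper names are hypothetical.

```python
from urllib.parse import urlparse

PREFIX = "log4j.appender.LOGEVENT."


def read_logevent_settings(properties_text):
    """Collect the log4j.appender.LOGEVENT.* entries into a dict."""
    settings = {}
    for line in properties_text.splitlines():
        line = line.strip()
        if line.startswith("#") or "=" not in line:
            continue  # skip comments and lines without a key/value pair
        key, _, value = line.partition("=")
        key = key.strip()
        if key.startswith(PREFIX):
            settings[key[len(PREFIX):]] = value.strip()
    return settings


def check_logevent_settings(settings):
    """Return a list of problems; an empty list means nothing was flagged."""
    problems = []
    url = urlparse(settings.get("url", ""))
    try:
        port = url.port
    except ValueError:  # non-numeric or out-of-range port
        port = None
    if url.scheme != "tcp" or not url.hostname or port is None:
        problems.append("url should look like tcp://host:port")
    for name in ("userName", "password"):
        if not settings.get(name):
            problems.append("%s is not set" % name)
    return problems
```

The check does not open a connection to BAM; it only catches missing or malformed entries before the server is restarted.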

Enabling the Log Viewer

When the log viewer is not enabled to take logs from Cassandra, its default behaviour is to take logs from the Carbon memory, so it displays only the most recent logs of the Carbon server. To get persistent logs (logs from the current date), set isDataFromCassandra to true, so that you can view persistent logs through the management console of any Carbon server (ESB/DSS/AS, etc.). You also need to give the user credentials of the Cassandra server as shown below.

Change logging-config.xml to view logs from BAM.

Go to Server_Home/repository/conf/etc -> logging-config.xml

Enable isDataFromCassandra

Give the Cassandra URL of the BAM server

Give the BAM server user credentials used to access the Cassandra server in BAM

<userName>admin</userName>

<password>admin</password>

Give the HDFS URL and path where the archived logs are hosted, for the log viewer

<archivedHost>hdfs://localhost:9000</archivedHost>

<archivedHDFSPath>/stratos/logs</archivedHDFSPath>
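
To see which HDFS location these two values point to together, they can be joined into a single URL. The following is a minimal Python sketch; how the log viewer combines the values internally is not described here, so the helper `archived_logs_url` is only an illustration.

```python
import posixpath
from urllib.parse import urlparse


def archived_logs_url(archived_host, archived_hdfs_path):
    """Join <archivedHost> and <archivedHDFSPath> into one hdfs:// URL."""
    host = archived_host.rstrip("/")
    if urlparse(host).scheme != "hdfs":
        raise ValueError("archivedHost should start with hdfs://")
    # Normalize the path so that stray or missing slashes do not matter.
    path = posixpath.normpath("/" + archived_hdfs_path.strip("/"))
    return host + path
```

With the values above, `archived_logs_url("hdfs://localhost:9000", "/stratos/logs")` gives `hdfs://localhost:9000/stratos/logs`.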

Setting up Logging Analyzer - WSO2 BAM Side

Setting up BAM

Bind IPs for Cassandra (this is not logging related; it only binds an IP address to Cassandra so that Cassandra does not start on localhost)

Copy cassandra.yaml from {WSO2_BAM_HOME}/repository/components/features/org.wso2.carbon.cassandra.server_4.0.1/conf/cassandra.yaml to repository/conf/etc. Change the IP address (localhost) in listen_address and rpc_address to the correct IP address of BAM.

Copy cassandra-component.xml from {WSO2_BAM_HOME}/repository/components/features/org.wso2.carbon.cassandra.dataaccess_4.0.1/conf/cassandra-component.xml to repository/conf/etc. Change the IP address (localhost) to the correct IP address of BAM.
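
The cassandra.yaml change can be scripted once the file has been copied. The following is a minimal Python sketch that rewrites the `listen_address` and `rpc_address` lines of the YAML text with a regular expression; the function name is hypothetical, it accepts only literal IP addresses (not host names), and it does not cover cassandra-component.xml.

```python
import ipaddress
import re

ADDRESS_LINE = re.compile(r"^(listen_address|rpc_address):.*$", re.MULTILINE)


def bind_cassandra_addresses(yaml_text, ip_address):
    """Return yaml_text with listen_address and rpc_address set to ip_address."""
    # Raises ValueError if ip_address is not a valid IPv4 or IPv6 literal.
    ipaddress.ip_address(ip_address)
    # Only uncommented keys at the start of a line are rewritten.
    return ADDRESS_LINE.sub(lambda m: "%s: %s" % (m.group(1), ip_address), yaml_text)
```

Working on the text line by line keeps the comments and ordering of the original file, which a parse-and-dump round trip through a YAML library would not necessarily preserve.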

Installing Logging Summarizer

Download the P2 profile that contains the logging summarizer features. Install the logging.summarizer feature through the Management Console (go to Configure -> Features and click Add Repository). Once you add the repository, you are redirected to a page that lists the available features. Select the BAM summarizer feature and install it.

Change logging-config.xml

<isDataFromCassandra>true</isDataFromCassandra>

Change the log rotation paths: give the Apache log rotation directory as the log directory, and give the BAM username and password

<publisherURL>tcp://localhost:7611</publisherURL>

<publisherUser>admin</publisherUser>

<publisherPassword>admin</publisherPassword>

<logDirectory>/home/usr/apache/logs/</logDirectory>

<tmpLogDirectory>/home/usr/temp/logs</tmpLogDirectory>

Point BAM to external hdfs file server

In order to point the analyzers to the hdfs file system you need to update BAM_HOME/repository/conf/

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:9000</value>

</property>
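
To confirm which HDFS endpoint the analyzers will use, the property can be read back from the configuration file. The following is a minimal Python sketch, assuming the file follows the usual Hadoop layout of `<property>` elements inside a `<configuration>` root; the sample text and both helper names are illustrative.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# Illustrative file content; the <configuration> root is an assumption.
SAMPLE = """
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
"""


def read_hadoop_properties(xml_text):
    """Map each <property> name to its value."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name"): p.findtext("value") for p in root.iter("property")}


def hdfs_endpoint(properties):
    """Return (host, port) taken from fs.default.name."""
    parsed = urlparse(properties["fs.default.name"])
    if parsed.scheme != "hdfs":
        raise ValueError("fs.default.name should be an hdfs:// URL")
    return parsed.hostname, parsed.port
```

The host and port returned here should match the `hdfsConfig` and `archivedHost` values configured earlier, so that the summarizer, the log viewer, and the analyzers all point to the same HDFS server.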

Now logging is configured in both the publisher and the receiver, and you can view your logs by logging in to the management console and opening System Logs. This shows the current logs as well as the archived logs taken from the Apache server. Logs are archived daily to the Apache server through a cron job.

If you want to analyze logs using Hive analytics and display them in dashboards, you can use the BAM analytics tools and dashboard toolkits to customize logging KPIs for system administration.